Two sessions to show a pathway for world to overcome mistaken view of group confrontation: German think tank founder

Editor's Note:

This week, China kicked off the two sessions, one of the country's most important annual political gatherings. The event offers the outside world an important window into China's development, where the world's second-largest economy is headed, and its top policy priorities for 2024.

What are the world's general expectations of the two sessions? And how do foreign economists and think tanks view China's growth potential? Global Times reporter Li Xuanmin (GT) recently interviewed Helga Zepp-LaRouche (HZL), founder of the Schiller Institute, a Germany-based political and economic think tank.

GT: China is holding the annual two sessions this week. What are your expectations for the meeting?

HZL: I expect that it will address strategic challenges that pose barriers to global development, based on China's vision of building a global community with a shared future. I will also be especially interested to learn what the idea of building "new quality productive forces" will actually mean in practice, since the Chinese economy is already the locomotive of the world economy.

Mankind is clearly at a branching point. Since geopolitics is the curse of history, I am hopeful that China's two sessions will show a pathway for the world and help it overcome the mistaken view that a country or a group of countries must defend its interests against another group by any means. It is quite possible for China to establish a new paradigm in which the interests of all are taken care of. Such a system would allow for the development of all.

GT: What is the significance of the political gathering amid rising global headwinds? And from your perspective, what role will China and the Chinese economy play in the world this year?

HZL: Chinese foreign policy has proven to be an anchor of stability. The biggest dangers now are the two regional conflicts: the Russia-Ukraine conflict and the Israel-Palestine conflict. China has made comprehensive diplomatic proposals for both crises, showing a path to a peaceful solution.

Extending the China-proposed Belt and Road Initiative (BRI) could play a decisive role in the reconstruction of the countries involved in these conflicts, within a broader regional development perspective. The Schiller Institute has also proposed a concrete economic development plan, the Oasis plan, which could bring peace to all the countries involved.

GT: From your perspective, what will be the focal points of China's economic work this year? What are your estimates for key economic figures that will be set during the two sessions?

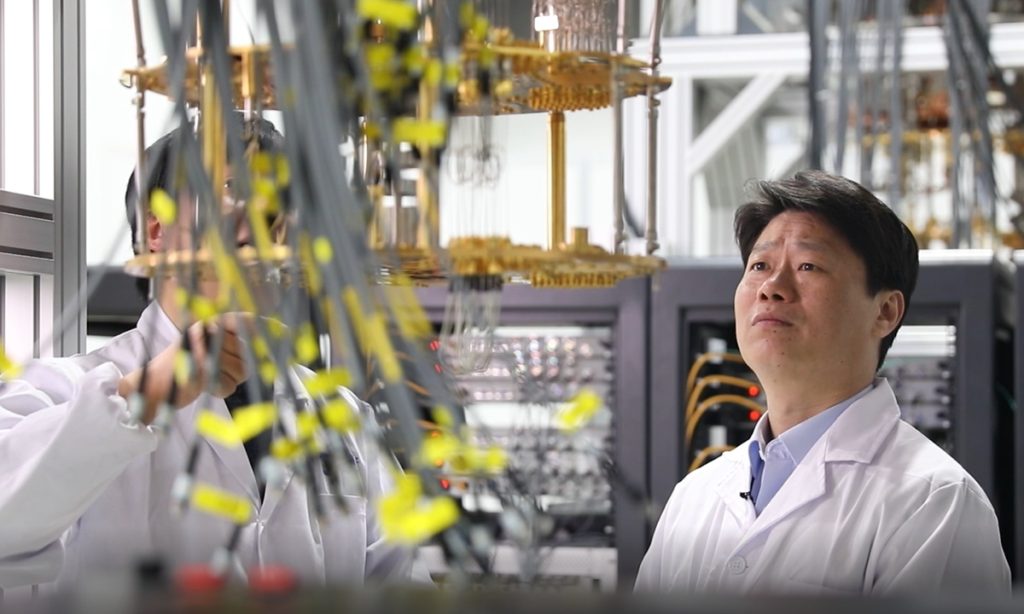

HZL: The focus of economic work this year will be on boosting high technology, high efficiency and high-quality production. Given the large number of scientists and engineers in China, plus around 11 million new university graduates each year, turning basic scientific and technological breakthroughs into real production will be very significant for the Chinese economy.

GT: What role do you expect the Chinese economy to play in the world this year, amid the complex geopolitical situation?

HZL: There has been a growing tendency toward decoupling and "de-risking," which really amount to the same thing. When the EU prepares trade barriers against Chinese imports, it further isolates itself to its own detriment. I expect China to be a strong advocate for multilateralism and to build cooperation with the Global South, where countries have made tremendous efforts to shift from exporting raw materials toward economic models that move up the value chain.

Naturally, BRICS-Plus, of which China is a founding member, will also gain importance this year and will include new credit mechanisms that promote development for all participants.

GT: The BRI is also high on the agenda of the two sessions. From your perspective, how will China chart a new BRI blueprint in the initiative's 11th year?

HZL: Since the development needs of the Global South are enormous, the China-proposed BRI offers plenty of opportunities in the next decade for all nations to work together for mutual benefit. If all countries keep such a perspective in mind, the next decade of the BRI can unleash the creative potential of billions of people.

As for European countries, Germany and France in particular are experiencing a dramatic economic slowdown, so hopefully there will be greater openness to what the BRI has to offer.